
What are S3 and boto3, by the way?

Amazon Simple Storage Service, widely known as Amazon S3, is a highly scalable, fast, and durable object storage service that can hold data of any type. S3 does not store files in a hierarchical file system the way a conventional operating system does; instead, it stores each file as an object inside a bucket, identified by a unique key.

S3 lets you organize data in a logical hierarchy built from key prefixes. A key such as invoices/2023/jan.pdf is displayed as a file inside nested folders, which makes objects easy to navigate even though the underlying namespace is flat. And the best part is that the top-level container, the S3 bucket, has a globally unique name. Static website hosting, data archival, and software delivery are a few common scenarios where S3 is a perfect fit.
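To make the "folders" idea concrete, here is a small pure-Python sketch that mimics how a listing with a "/" delimiter (the `Delimiter` parameter of S3's ListObjectsV2 operation) groups a flat set of keys into folder-like common prefixes. The function name `list_level` and the sample keys are hypothetical, and no AWS call is made.

```python
def list_level(keys: list[str], prefix: str = "", delimiter: str = "/") -> tuple[list[str], list[str]]:
    """Split the keys under `prefix` into (folders, files) for one level of the hierarchy.

    Mimics how S3 reports CommonPrefixes and Contents when listing with a delimiter.
    """
    folders: set[str] = set()
    files: list[str] = []
    for key in keys:
        if not key.startswith(prefix):
            continue  # Key lives outside the "folder" we are looking at.
        rest = key[len(prefix):]
        if delimiter in rest:
            # Everything up to the next delimiter is shown as a sub-folder.
            folders.add(prefix + rest.split(delimiter, 1)[0] + delimiter)
        else:
            files.append(key)
    return sorted(folders), sorted(files)


keys = [
    "invoices/2023/jan.pdf",
    "invoices/2023/feb.pdf",
    "invoices/readme.txt",
    "photos/unit-12.jpg",
    "terms.pdf",
]
print(list_level(keys))               # (['invoices/', 'photos/'], ['terms.pdf'])
print(list_level(keys, "invoices/"))  # (['invoices/2023/'], ['invoices/readme.txt'])
```

In this model a folder is nothing more than a prefix shared by some keys, which is why the hierarchy is called logical rather than physical.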

You can easily push data to and pull data from S3 using AWS tools such as the AWS SDKs or the AWS CLI. The SDKs are available for a number of popular programming languages, including Python, which is where boto3 comes into the picture.

boto3 is the Amazon Web Services (AWS) SDK for Python. It enables Python developers to create, configure, and manage AWS services such as EC2 and S3. It provides an easy-to-use, object-oriented API as well as low-level access to AWS services.
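The two styles mentioned above correspond to boto3 clients (`boto3.client("s3")`, one method per S3 API operation, returning plain dictionaries) and resources (`boto3.resource("s3")`, Python objects such as buckets and objects). The sketch below lists a bucket's keys both ways. The helper names are hypothetical, and the functions take an already-created client or resource so they can be tried without AWS credentials.

```python
from typing import Any


def keys_via_client(s3_client: Any, bucket: str) -> list[str]:
    """Low-level style: call the ListObjectsV2 operation and read the response dict."""
    response = s3_client.list_objects_v2(Bucket=bucket)
    # One call returns at most 1,000 keys; larger buckets need a paginator.
    # "Contents" is absent from the response when the bucket is empty.
    return [obj["Key"] for obj in response.get("Contents", [])]


def keys_via_resource(s3_resource: Any, bucket: str) -> list[str]:
    """Object-oriented style: iterate over the bucket's object collection."""
    # The collection fetches further pages automatically while iterating.
    return [obj.key for obj in s3_resource.Bucket(bucket).objects.all()]
```

With real AWS objects these would be called as `keys_via_client(boto3.client("s3"), "my-bucket")` or `keys_via_resource(boto3.resource("s3"), "my-bucket")`. The boto3 documentation notes that no new features are planned for the resource interface, so newer code often favours clients.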

and…Django?

For a basic overview of Django, head over to one of our previous articles, A Brief Intro: Python and Django.

Introduction

Over the years, the self-storage industry has grown into a 38-billion-dollar colossus. There are more than 47,539 self-storage facilities in the United States, and a great deal of complex logistics goes on behind the scenes.

We at CodeParva Technologies are on a voyage to build highly configurable, scalable, high-performing enterprise web applications that cater to the needs of the ever-demanding self-storage industry and handle its complex operations smoothly.

When we talk about an industry this big, there is a lot of data at stake: paperwork such as business letters, transactional documents, and financial reports, as well as user-related media. We need to manage all of it, and manage it well, with a way to store and retrieve it easily whenever needed. That is how S3 caught our eye.

Why use S3?

There is always the option of keeping data and files on your own, possibly dedicated, servers. That works pretty well and can certainly be cheap. The challenge, however, is making sure these documents are secure, properly backed up, and accessible 24x7 from anywhere.

Fun Fact [PS. READ IT AT YOUR OWN RISK]: Lots of people use personal servers to back up their data, but not many of them know how to secure those servers properly. As a result, their data is easily available to hackers, and sometimes even to ordinary search engines. “DCIM” is the folder name Android devices commonly use for camera images. Just search Google for “index of: DCIM” and you will be shocked at how much personal data is lying there without any protection.

In such cases, it is better to leave security and maintenance to the professionals. It may cost a little more, but the benefits easily outweigh the extra cost.

We did something similar and opted for a third-party service provider, Amazon S3. It comes with many built-in benefits, such as low cost, high availability, security, simple data transfer, and easy integration, that make our lives easier.

To sum up, here are some of the benefits you get with S3:

- Simple and Scalable

- All-time Availability

- Durability and Accessibility

- Cost-Effective Storage

- Versioning and Auto-Upgrades

- Powerful Security

- Backed by Amazon

Now, let’s see how you can use boto3 to build something like this for your own project.

First of all, to use S3 you need an AWS account. If you already have one, proceed ahead; otherwise, use this link to create an account for yourself.

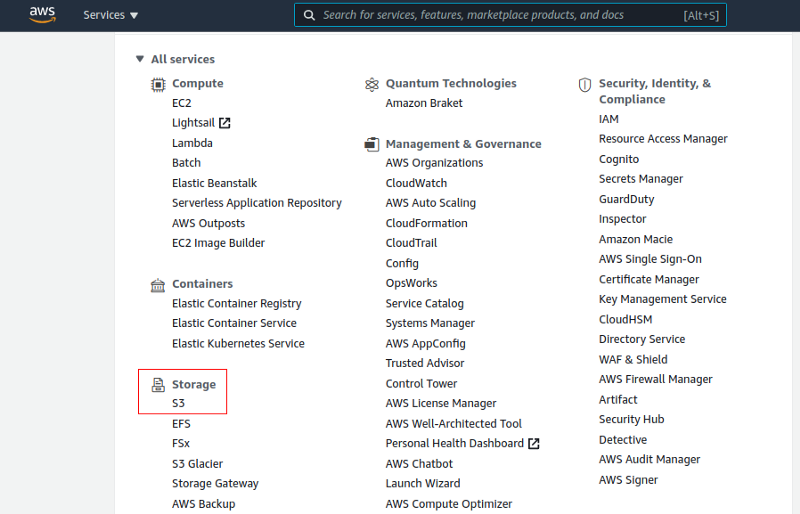

Now log in to your account and, on the home page, search for “S3” under All services >> Storage. Click it to open the S3 dashboard, and create a new bucket to hold our uploads.
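If you prefer to create the bucket from code rather than the console, an S3 client offers a `create_bucket` call. The sketch below checks a candidate name against a subset of the documented naming rules (3 to 63 characters of lowercase letters, digits, dots, and hyphens; starting and ending with a letter or digit; no adjacent dots; not formatted like an IP address; no "xn--" prefix) and builds the keyword arguments for `create_bucket`. The helper names and the bucket name are hypothetical, and global uniqueness can only be checked by AWS itself when the bucket is actually created.

```python
import re

# A subset of the S3 general-purpose bucket naming rules.
_BUCKET_NAME = re.compile(r"[a-z0-9][a-z0-9.-]{1,61}[a-z0-9]")
_IP_ADDRESS = re.compile(r"\d{1,3}(\.\d{1,3}){3}")


def is_valid_bucket_name(name: str) -> bool:
    """Return True if `name` passes the local checks (uniqueness is checked by AWS)."""
    return (
        _BUCKET_NAME.fullmatch(name) is not None
        and ".." not in name
        and _IP_ADDRESS.fullmatch(name) is None
        and not name.startswith("xn--")
    )


def create_bucket_kwargs(name: str, region: str) -> dict:
    """Build keyword arguments for an S3 client's create_bucket call."""
    if not is_valid_bucket_name(name):
        raise ValueError(f"invalid S3 bucket name: {name!r}")
    kwargs: dict = {"Bucket": name}
    # us-east-1 is the default location; other regions are named explicitly.
    if region != "us-east-1":
        kwargs["CreateBucketConfiguration"] = {"LocationConstraint": region}
    return kwargs
```

With a client in hand, the call would look like `s3_client.create_bucket(**create_bucket_kwargs("codeparva-uploads", "ap-south-1"))`.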

Source: AWS Console Home

Source: S3 Console Buttons

boto3 in Action:

Once you are done with the above steps, you need the following secret credentials from AWS in order to use boto3 (refer to the AWS docs for creating and storing these credentials):

- AWS_ACCESS_KEY_ID

- AWS_SECRET_ACCESS_KEY

- AWS_S3_REGION_NAME
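Rather than hard-coding these values, a common approach is to read them from environment variables. boto3 already picks up AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY from the environment on its own; AWS_S3_REGION_NAME is not a variable boto3 reads by itself (it resolves the region from AWS_DEFAULT_REGION or the AWS config file), so that value has to be passed in explicitly. The small loader below, with the hypothetical names `AwsSettings` and `load_aws_settings`, fails fast when something is missing.

```python
import os
from dataclasses import dataclass
from typing import Mapping, Optional

REQUIRED_VARS = ("AWS_ACCESS_KEY_ID", "AWS_SECRET_ACCESS_KEY", "AWS_S3_REGION_NAME")


@dataclass(frozen=True)
class AwsSettings:
    access_key_id: str
    secret_access_key: str
    region_name: str


def load_aws_settings(env: Optional[Mapping[str, str]] = None) -> AwsSettings:
    """Read the three values from the environment (or a given mapping) and validate them."""
    env = os.environ if env is None else env
    missing = [name for name in REQUIRED_VARS if not env.get(name)]
    if missing:
        raise RuntimeError(f"missing AWS settings: {', '.join(missing)}")
    return AwsSettings(
        access_key_id=env["AWS_ACCESS_KEY_ID"],
        secret_access_key=env["AWS_SECRET_ACCESS_KEY"],
        region_name=env["AWS_S3_REGION_NAME"],
    )
```

In a Django project, this could be called once from settings.py so that a missing credential is reported at startup rather than on the first upload.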

>> Install boto3 in your project environment:

:~$ pip install boto3

1. Creating an AWS session:

Here, using the AWS credentials, we create a session object to interact with AWS and operate on its various resources.

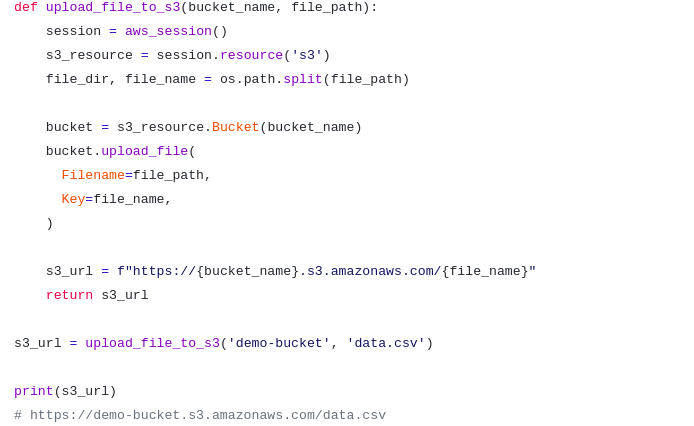

2. Uploading a file:

This function uploads the given file to the specified bucket and returns the AWS S3 resource URL for accessing it. Here we use the upload_file method, which has two key parameters:

- The first one specifies the path of the local file to upload.

- The second one, called the ‘key’, acts as a unique identifier for the file (stored as an object) in the S3 bucket.

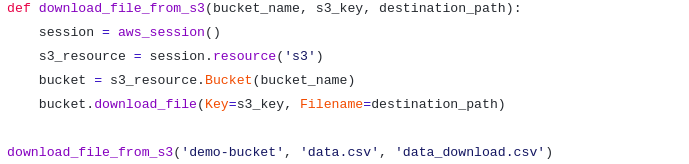
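A sketch of such an upload function, under a few assumptions: it receives an already-created S3 client, it uses the client's `upload_file(Filename, Bucket, Key)` call, and it builds a virtual-hosted-style URL of the form `https://<bucket>.s3.<region>.amazonaws.com/<key>`. The function name and example values are hypothetical. Such a URL only works if the object is publicly readable; for private documents, a time-limited link from `generate_presigned_url` is the usual alternative.

```python
from typing import Any
from urllib.parse import quote


def upload_and_get_url(s3_client: Any, file_path: str, bucket: str, key: str, region: str) -> str:
    """Upload a local file to `bucket` under `key` and return the object's URL."""
    # upload_file streams the file and switches to multipart upload for large files.
    s3_client.upload_file(Filename=file_path, Bucket=bucket, Key=key)
    # Percent-encode the key (spaces, etc.) but keep "/" so prefixes stay readable.
    return f"https://{bucket}.s3.{region}.amazonaws.com/{quote(key)}"
```

For example, uploading /tmp/lease.pdf as leases/unit 12.pdf to a bucket named codeparva-uploads in ap-south-1 would return https://codeparva-uploads.s3.ap-south-1.amazonaws.com/leases/unit%2012.pdf.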

3. Downloading a file:

This works much like the upload, except that here we use the download_file method, which saves the object identified by the given key to a local file path.
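A matching download sketch, assuming an already-created S3 client is passed in. One detail worth noting: `download_file` takes its arguments as `(Bucket, Key, Filename)`, with the local path last, whereas `upload_file` takes `(Filename, Bucket, Key)`, so passing them by keyword avoids mix-ups. The helper name `download_to` is hypothetical; it also creates the destination folder first, since `download_file` expects the local directory to exist.

```python
from pathlib import Path
from typing import Any


def download_to(s3_client: Any, bucket: str, key: str, destination: str) -> Path:
    """Download the object `key` from `bucket` to the local path `destination`."""
    target = Path(destination)
    # Make sure the local folder exists before boto3 writes the file.
    target.parent.mkdir(parents=True, exist_ok=True)
    s3_client.download_file(Bucket=bucket, Key=key, Filename=str(target))
    return target
```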

These three steps are the pivotal building blocks for basic file storage operations, and they can be plugged into any of your services that need to store data. However, this is just the tip of the iceberg; there is a lot more to explore in the boto3 documentation (link given below).

Conclusion

In this article, we have seen how simple it is to integrate the AWS SDK (boto3) into your Django project to leverage the power of S3 and tackle data storage challenges effortlessly. Beyond file storage, boto3 lets you access and use many other AWS services from Python code, from sending messages to SNS to managing EC2 instances to accessing CloudWatch.

We at CodeParva are leveraging the power of Python and Django, integrating them with AWS services to build our own seamless solution for the global self-storage industry. Join us here

Visit CodeParva Technologies to learn more about us. LinkedIn | Instagram